A joint cybersecurity investigation has uncovered a vast, unsecured global network of artificial intelligence infrastructure. Researchers from SentinelOne’s SentinelLabs and Censys identified approximately 175,000 unique Ollama AI servers publicly accessible on the internet across 130 countries.

These systems, which run the open-source Ollama framework for deploying large language models, are spread across both commercial cloud and residential networks. The investigation concluded that they operate outside any centralized security control or management, creating a significant and unintended layer of AI compute infrastructure.

Scope and Nature of the Exposure

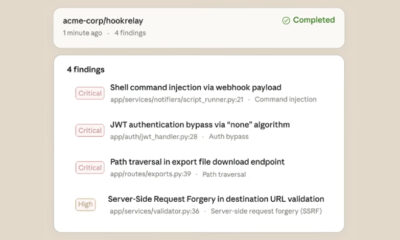

The exposed servers are instances where the Ollama API is openly accessible without authentication on the default port (11434). This configuration allows anyone with the server’s IP address to interact with the hosted AI models: to run queries, modify existing models, or deploy entirely new ones.
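To make concrete what "openly accessible without authentication" means, here is a minimal Python sketch of the kind of request involved. It relies on Ollama's `GET /api/tags` endpoint, which lists the models installed on a server. The helper names (`parse_model_names`, `list_models`) and the sample response are illustrative assumptions, not taken from the researchers' tooling, and the sketch should only be pointed at hosts you operate.

```python
import json
import urllib.request

OLLAMA_PORT = 11434  # Ollama's default API port


def parse_model_names(body: bytes) -> list[str]:
    """Extract model names from the JSON body of an /api/tags response."""
    payload = json.loads(body)
    return [m["name"] for m in payload.get("models", []) if "name" in m]


def list_models(host: str, port: int = OLLAMA_PORT, timeout: float = 3.0) -> list[str]:
    """Ask a server which models it hosts. No credentials are sent,
    which is exactly why an exposed instance will answer anyone."""
    url = f"http://{host}:{port}/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_model_names(resp.read())


if __name__ == "__main__":
    # Illustrative response body (hypothetical sample, not real scan data).
    sample = b'{"models": [{"name": "llama2:latest"}, {"name": "mistral:latest"}]}'
    print(parse_model_names(sample))
```

If `list_models` returns a list when called from outside your own network, the instance is answering unauthenticated requests from the internet.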

This level of access poses multiple security risks. Unauthorized users could exploit the computational resources for malicious activities, such as generating phishing emails or misinformation at scale. They could also extract sensitive data from the models themselves or use the servers as a launch point for further network attacks.

Primary Risks and Potential Exploits

Cybersecurity experts highlight several concrete threats stemming from this exposure. A primary concern is “model theft,” where proprietary or fine-tuned AI models could be copied and downloaded by outsiders. Another risk is “data poisoning,” where bad actors could upload manipulated models to the server, corrupting its outputs for all future users.

Furthermore, these servers could be co-opted into a botnet for distributed denial-of-service (DDoS) attacks. They could also be used to serve malicious content or host illegal material, with the activity traced back to the legitimate server owner. The researchers noted that some exposed instances were already running suspicious or unauthorized models.

Background on the Ollama Platform

Ollama is a popular, freely available tool that simplifies the local deployment of open-source AI models, such as Llama 2 and Mistral. It bundles model weights, configuration, and data into a single package. Its ease of use has contributed to rapid adoption by developers and hobbyists.

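A standard installation binds the service to the loopback address (127.0.0.1), so it is reachable only from the local machine. Exposure typically arises when the service is reconfigured to listen on all network interfaces, for example by setting the OLLAMA_HOST environment variable to 0.0.0.0, as container and remote-access setups commonly do. Unless users also implement firewall rules or other access controls, the server can become inadvertently accessible to the entire internet. The researchers’ scan specifically looked for this open, unauthenticated state.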
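Whether an instance is reachable beyond the local machine comes down to the address it binds to, which Ollama reads from the `OLLAMA_HOST` environment variable. The sketch below classifies such a value using Python's standard `ipaddress` module. The function name and the category labels are assumptions made for illustration, and the parsing covers only plain `host` and `host:port` forms.

```python
import ipaddress


def classify_bind_address(value: str) -> str:
    """Classify a bind value such as '0.0.0.0' or '127.0.0.1:11434'.

    Returns one of 'loopback', 'all-interfaces', 'specific-address',
    or 'unknown' (labels are illustrative, not Ollama terminology).
    """
    host = value.strip()
    if host.startswith("["):
        # Bracketed IPv6 form, e.g. '[::]:11434'
        host = host[1:].split("]", 1)[0]
    elif host.count(":") == 1:
        # IPv4 or hostname with a port, e.g. '0.0.0.0:11434'
        host = host.split(":", 1)[0]

    if host == "localhost":
        return "loopback"
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        return "unknown"  # other hostnames or empty values are not resolved here

    if addr.is_unspecified:  # 0.0.0.0 or :: means every interface
        return "all-interfaces"
    if addr.is_loopback:
        return "loopback"
    return "specific-address"


if __name__ == "__main__":
    for candidate in ("127.0.0.1:11434", "0.0.0.0", "[::]:11434"):
        print(candidate, "->", classify_bind_address(candidate))
```

An `all-interfaces` result is not a vulnerability in itself, but it means that a firewall or some other network control is the only thing standing between the API and the internet.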

Responses and Recommended Actions

In response to the findings, security analysts urge all Ollama users to immediately verify their server’s exposure. The standard mitigation is to ensure the Ollama service is not bound to a publicly facing interface or is protected behind a firewall or VPN. Implementing authentication is also strongly recommended for any instance that must be network-accessible.
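One simple way to begin verifying exposure is to test whether the Ollama port accepts TCP connections at all. The following sketch uses only Python's standard `socket` module. The function name is hypothetical, and the result depends on where the check runs: a test from inside the same network says little about what the internet can reach, so it is most meaningful when run from an external vantage point against a host you own.

```python
import socket


def accepts_connections(host: str, port: int = 11434, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Checks the local machine only; substitute the public address of a host you operate.
    print(accepts_connections("127.0.0.1"))
```

A `False` result from outside the network, alongside a `True` result from a trusted internal host, is the outcome a firewall or VPN is meant to produce.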

The researchers have engaged in responsible disclosure practices, notifying affected organizations where possible. They also provided detailed technical indicators of compromise (IOCs) to help network defenders identify exposed instances within their own infrastructure.

Broader Implications for AI Security

This incident underscores a growing challenge in the democratization of powerful AI tools. As deployment becomes easier, the responsibility for securing these systems often falls on individual users or small teams who may lack enterprise-grade security expertise. The scale of this exposure suggests a widespread gap between adoption and secure configuration.

The findings echo past exposures of databases, cameras, and IoT devices, where permissive configurations and a lack of awareness led to massive, unintended public access. The addition of generative AI capabilities amplifies the potential consequences, making the infrastructure a high-value target.

The investigation is ongoing, with researchers continuing to monitor the landscape. Further analysis is expected to detail the geographic distribution of exposed hosts and the specific models most commonly found on them. The cybersecurity community anticipates increased scanning and exploitation attempts by threat actors following this public disclosure, prompting calls for urgent remediation by server operators worldwide.

Source: SentinelLabs, Censys