A significant gap has emerged between the rapid adoption of artificial intelligence tools in business environments and the security measures designed to govern them. This discrepancy is creating new vulnerabilities as organizations struggle to apply effective oversight.

AI applications are now deeply integrated into daily corporate operations. They are embedded within software-as-a-service platforms, web browsers, coding assistants, browser extensions, and a growing array of unofficial tools. These tools, often adopted by employees without formal approval, are proliferating faster than many security teams can monitor.

Legacy Systems Prove Inadequate

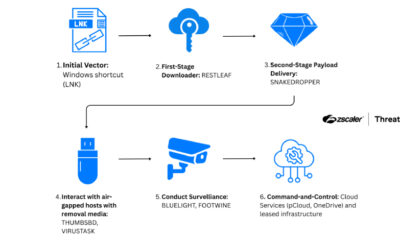

Despite this pervasive integration, most enterprises continue to rely on traditional security controls. These legacy systems typically operate at the network or endpoint level, far from the actual points of AI interaction. Consequently, they fail to monitor or manage how data is shared with AI models or how AI-generated content is used within business processes.

Security analysts note that traditional web gateways and data loss prevention tools are not built to interpret the unique protocols and data formats used by AI applications. This creates a blind spot where sensitive corporate information could be inadvertently exposed or where AI outputs might introduce compliance risks or misinformation.

The Rise of Unmanaged “Shadow AI”

The phenomenon of “shadow AI,” where employees use AI tools without official sanction or visibility from IT departments, compounds the problem. The ease of access to powerful AI through consumer-grade interfaces has accelerated this trend, making centralized governance increasingly difficult.

Industry reports indicate that a majority of workers now use some form of generative AI for tasks ranging from email drafting to code generation. However, formal organizational policies governing this usage often lag behind, if they exist at all.

Industry and Regulatory Response

In response to these challenges, a new category of security solutions focused on AI usage control is gaining attention. These platforms aim to operate closer to the point of AI interaction, providing visibility and policy enforcement specific to AI applications.
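As a rough illustration of what enforcement "closer to the point of AI interaction" could look like, the following is a minimal sketch of a check run on a prompt before it leaves the organization. It is not drawn from any specific product: the tool allowlist, the pattern names, and the `check_prompt` function are all hypothetical, and the regular expressions are far too simple for production use.

```python
import re
from dataclasses import dataclass, field

# Hypothetical allowlist of sanctioned AI tools (illustrative name only).
APPROVED_TOOLS = {"approved-assistant"}

# Illustrative patterns for sensitive data. Real detection would need to be
# much more robust than these two assumptions.
SENSITIVE_PATTERNS = {
    "email_address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card_like_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


@dataclass
class Decision:
    allowed: bool
    reasons: list = field(default_factory=list)


def check_prompt(tool: str, prompt: str) -> Decision:
    """Decide whether a prompt may be sent to the named AI tool."""
    reasons = []
    # Shadow AI check: is the tool on the sanctioned list?
    if tool not in APPROVED_TOOLS:
        reasons.append(f"unapproved tool: {tool}")
    # Data exposure check: does the prompt appear to contain sensitive data?
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(prompt):
            reasons.append(f"sensitive data: {label}")
    return Decision(allowed=not reasons, reasons=reasons)
```

A browser extension or API proxy could call a check like this and log the returned reasons, which would give security teams both visibility into usage and a place to enforce policy.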

Simultaneously, regulatory bodies in multiple regions are beginning to draft guidelines for responsible AI use in enterprise settings. These frameworks are expected to mandate stricter controls on data privacy, algorithmic transparency, and output validation when using third-party AI services.

Moving Toward Integrated Governance

The evolving security landscape suggests that effective AI governance will require an integrated approach. This approach must combine updated acceptable use policies, employee training, and technical controls specifically designed for AI workflows. Experts emphasize that control points need to shift from the network perimeter to the application and data layers where AI interactions actually occur.

Looking ahead, the convergence of AI-specific security tools and emerging regulatory standards is expected to define corporate AI strategy for the foreseeable future. Organizations are expected to accelerate investment in dedicated AI security posture management as the technology's integration deepens and regulatory pressure increases. The development of industry-wide benchmarks for AI risk assessment is also considered a likely next step.

Source: Industry Analysis