Anthropic, a leading artificial intelligence research company, has initiated a limited release of a new security feature for its Claude AI assistant. The feature, named Claude Code Security, is designed to automatically scan software codebases for potential vulnerabilities and recommend specific fixes. The rollout applies generative AI to software security, with the aim of helping developers identify and remediate flaws more efficiently.

Limited Availability and Core Function

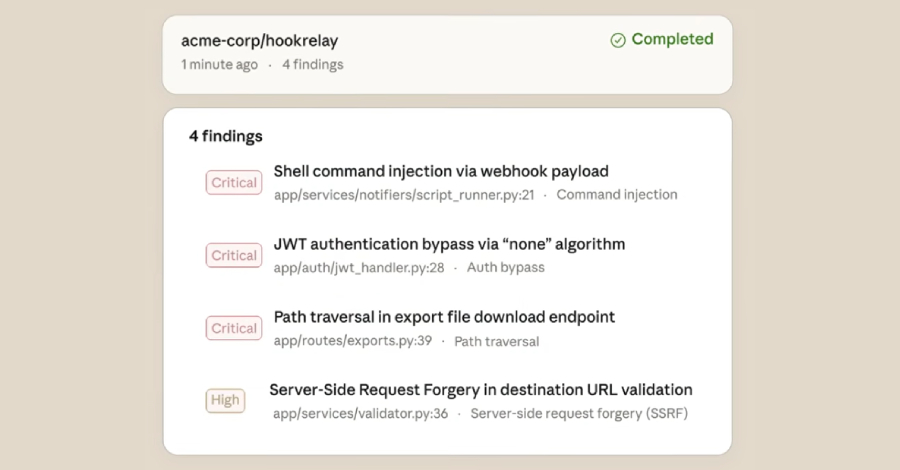

Claude Code Security is currently available only as a research preview, offered to Anthropic’s Enterprise and Team tier customers. The company describes the tool’s primary function as scanning code for security vulnerabilities and then suggesting targeted patches to address the identified issues. This positions the feature as a direct application of AI to proactive security within the software development lifecycle.

The introduction of this capability comes at a time when software supply chain attacks and vulnerabilities are a top concern for organizations globally. By integrating vulnerability scanning directly into a coding assistant, Anthropic seeks to provide a more seamless security workflow for development teams.

Context in the Competitive AI Landscape

Anthropic’s move follows a broader industry trend in which AI companies are expanding their offerings beyond general-purpose chatbots into specialized, professional tools. Rivals such as OpenAI, with ChatGPT and its GPT-4 models, and GitHub, with Copilot, have also integrated various levels of code analysis and generation. However, Anthropic’s explicit focus on security scanning as a dedicated feature represents a distinct product direction.

The company has built its reputation on developing AI systems with a strong emphasis on safety and reliability, an emphasis reflected in its “Constitutional AI” training approach. The launch of a security-focused tool aligns with this overarching mission, applying its AI research to a domain where accuracy and trust are paramount.

Potential Impact and Considerations

For developers and security professionals, AI-powered vulnerability scanning promises to augment manual code reviews and traditional static application security testing (SAST) tools. Proponents argue it could lead to faster identification of common security bugs, such as SQL injection or cross-site scripting flaws, early in the development process.
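
To make the SQL injection example concrete, the following is a minimal sketch of the kind of flaw a scanner might flag and the kind of patch it might suggest. It is an illustration only, not output from Claude Code Security or a description of how the tool works; the table, the function names, and the payload are hypothetical, and the code uses Python's standard sqlite3 module.

```python
import sqlite3


def make_db():
    # Hypothetical in-memory table used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable: user input is concatenated directly into the SQL string,
    # so a crafted value can change the meaning of the query.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Patched: a parameterized query passes the input as data, not as SQL.
    query = "SELECT name, role FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


conn = make_db()
payload = "nobody' OR '1'='1"

# The unsafe version matches every row because the payload rewrites the WHERE clause.
print(len(find_user_unsafe(conn, payload)))

# The safe version treats the payload as a literal name and matches nothing.
print(find_user_safe(conn, payload))
```

The difference between the two functions is a single line, which is why this class of bug is a common target for automated detection and patch suggestion.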

However, the effectiveness and reliability of such AI-generated security advice will be closely scrutinized. The field of application security requires a high degree of precision; false positives can waste developer time, while false negatives could leave dangerous vulnerabilities undiscovered. Anthropic’s decision to launch the feature as a limited research preview suggests an acknowledgment of these challenges, allowing for real-world testing and refinement based on user feedback.
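
The trade-off between false positives and false negatives is usually summarized with two standard measures, precision and recall. The sketch below shows the arithmetic; the counts are invented for illustration and do not describe the measured performance of Claude Code Security or any other tool.

```python
def scanner_metrics(true_positives, false_positives, false_negatives):
    # Precision: share of reported findings that are real vulnerabilities.
    # Low precision means developers spend time on false alarms.
    reported = true_positives + false_positives
    precision = true_positives / reported if reported else 0.0

    # Recall: share of real vulnerabilities that the scanner reported.
    # Low recall means dangerous flaws go undiscovered.
    actual = true_positives + false_negatives
    recall = true_positives / actual if actual else 0.0

    return precision, recall


# Hypothetical scan: 8 real issues found, 12 false alarms, 2 real issues missed.
precision, recall = scanner_metrics(8, 12, 2)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In this made-up case the scanner finds most of the real issues, but more than half of what it reports is noise, which is the kind of result a preview period would be expected to surface.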

Looking Ahead

Anthropic has not publicly announced a specific timeline for a general release of Claude Code Security. The company’s next steps will likely involve gathering data from the preview participants to improve the tool’s accuracy and usability. Broader availability for all Claude users may depend on the results of this initial testing phase. The development will be watched closely as part of the ongoing evolution of AI-assisted software development and its role in enhancing cybersecurity practices.

Source: Anthropic